Implementing AI Agents and Just-in-Time (JiT) software applications involves more than plugging in an AI tool. It requires an integrated strategy that combines people, processes, and technology.

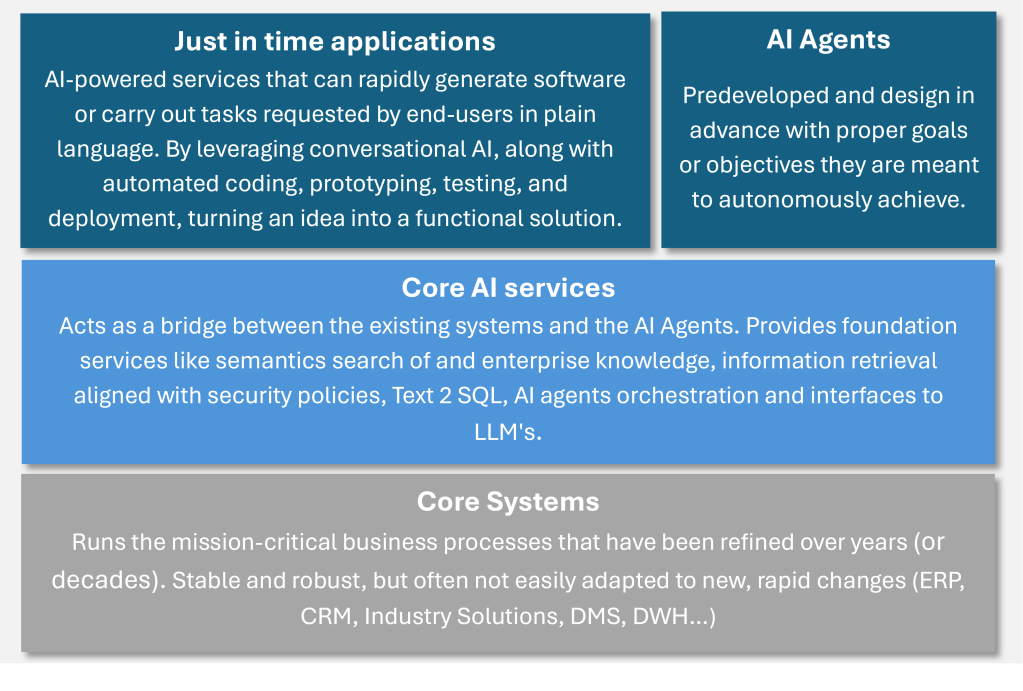

The figure above illustrates our end-to-end approach to the enterprise architecture that powers AI Agents and JiT software.

Core AI Services from Atoms and Bits

The Core AI Services form an intermediary layer between enterprise systems and AI-driven applications. They provide the foundational capabilities needed for scalable, secure, and efficient AI operations, and they connect AI agents to enterprise data, policies, and analytics frameworks.

1. Semantic Search & Enterprise Knowledge Retrieval

Enables AI agents to retrieve contextually relevant information across structured and unstructured enterprise data sources using advanced Natural Language Processing (NLP) and embedding-based search models.

- Vector-based Search Engine – Utilizes dense vector embeddings to perform semantic similarity searches.

- Hybrid Search – Combines full-text keyword search (e.g., Elasticsearch) with vector search, so that both exact-term matches and semantically similar passages contribute to the ranking.

- Document & Knowledge Graph Indexing – Integrates with enterprise repositories (SharePoint, OneDrive, DMS) to build knowledge graphs for improved entity linking and reasoning.

- Retrieval-Augmented Generation (RAG) – Enhances AI-generated responses by dynamically retrieving relevant data from enterprise knowledge bases.

- Access Control & Role-Based Retrieval – Ensures search results comply with user permissions and enterprise data governance policies.
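
The combination of hybrid ranking and role-based retrieval described above can be sketched in a few lines. This is a minimal illustration, not the platform's implementation: the `Doc` class, the toy two-dimensional embeddings, the term-overlap keyword score, and the `alpha` blending weight are all assumptions made for the example. A production system would use an embedding model and a search engine in their place.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    embedding: list[float]        # toy vector; normally produced by an embedding model
    allowed_roles: frozenset[str]  # roles permitted to see this document


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms that appear in the text (stand-in for full-text scoring)."""
    q_terms = set(query.lower().split())
    t_terms = set(text.lower().split())
    return len(q_terms & t_terms) / len(q_terms) if q_terms else 0.0


def hybrid_search(query, query_vec, docs, user_roles, alpha=0.5, top_k=3):
    """Filter by role first, then rank by a weighted blend of vector and keyword scores."""
    visible = [d for d in docs if d.allowed_roles & set(user_roles)]
    scored = [
        (alpha * cosine(query_vec, d.embedding)
         + (1 - alpha) * keyword_score(query, d.text), d.doc_id)
        for d in visible
    ]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:top_k]]


# Hypothetical sample corpus.
DOCS = [
    Doc("policy", "travel expense policy", [1.0, 0.0], frozenset({"employee"})),
    Doc("salary", "salary bands policy", [0.9, 0.1], frozenset({"hr"})),
    Doc("oncall", "on-call rotation guide", [0.0, 1.0], frozenset({"employee", "engineering"})),
]

print(hybrid_search("expense policy", [1.0, 0.0], DOCS, {"employee"}))
```

Filtering before ranking means a document the user may not see never enters the candidate set, so it cannot leak into a generated answer through the retrieval step.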

2. Information Retrieval Aligned with Security Policies

Provides AI-driven information retrieval while enforcing corporate security policies, ensuring that data access adheres to compliance and regulatory frameworks.

- OAuth 2.0 & SAML-based Authentication – Supports authentication via Microsoft Entra ID (formerly Azure AD) and other enterprise IAM solutions.

- Row-Level & Attribute-Based Access Control (ABAC) – Filters retrieved information based on user permissions and metadata-based policies.

- Data Masking & Redaction – Applies configurable policies to redact or mask sensitive information in AI-generated responses.

- Audit Logging & Compliance Monitoring – Tracks all retrieval activities to support compliance with data protection regulations and security standards (e.g., GDPR, ISO/IEC 27001).
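
To make the interplay of attribute-based filtering, redaction, and audit logging concrete, here is a small sketch. Everything in it is assumed for illustration: the `Record` fields, the three-level clearance ordering, the rule that public records are visible across departments, and the e-mail pattern used as the only redaction rule. Real policies would come from the enterprise IAM and data governance configuration.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    text: str
    department: str
    classification: str  # one of CLEARANCE_ORDER


# Assumed ordering from least to most sensitive.
CLEARANCE_ORDER = ["public", "internal", "confidential"]

# Simple e-mail pattern used as an example redaction rule.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def is_permitted(user_attrs: dict, record: Record) -> bool:
    """ABAC check: clearance must cover the record, and non-public
    records must belong to one of the user's departments."""
    cleared = (CLEARANCE_ORDER.index(user_attrs["clearance"])
               >= CLEARANCE_ORDER.index(record.classification))
    same_dept = record.department in user_attrs["departments"]
    return cleared and (same_dept or record.classification == "public")


def redact(text: str) -> str:
    """Mask e-mail addresses before the text reaches the model or the user."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)


def retrieve(user_attrs: dict, records: list, audit_log: list) -> list:
    """Return permitted, redacted records and log every access decision."""
    results = []
    for record in records:
        allowed = is_permitted(user_attrs, record)
        audit_log.append({
            "user": user_attrs["user_id"],
            "department": record.department,
            "classification": record.classification,
            "allowed": allowed,
        })
        if allowed:
            results.append(redact(record.text))
    return results


# Hypothetical user and records.
USER = {"user_id": "u1", "departments": {"finance"}, "clearance": "internal"}
RECORDS = [
    Record("Q3 forecast owner: jane.doe@example.com", "finance", "internal"),
    Record("Pending reorganization plan", "hr", "confidential"),
    Record("Office opening hours: 9 to 5", "facilities", "public"),
]

audit = []
print(retrieve(USER, RECORDS, audit))
```

Denied requests are logged as well as granted ones, since compliance reviews usually need to see both.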

3. Natural Language to Analytics (NL2A) for Enterprise Data Insights

Empowers users to generate analytical queries and retrieve business insights using natural language, bridging the gap between conversational AI and enterprise data analytics.

- Natural Language Reporting – Converts natural language questions into reports and visual dashboards.

- Multi-Platform Data Access – Integrates with enterprise data sources such as SAP HANA, Microsoft SQL Server, Snowflake, and Databricks.

- Context-Aware Analytics Generation – Understands database schemas, metadata, and business logic to construct precise analytical queries.

- Role-Based Data Access & Governance – Enforces security policies by restricting analytics results based on user roles and permissions.
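
One way to combine schema-aware query generation with role-based governance is to have the model emit a structured query plan and to validate that plan before any SQL is rendered. The sketch below follows that idea under stated assumptions: `stub_model` stands in for a real LLM call and always returns the same plan, and the `SCHEMA` and `ROLE_COLUMNS` dictionaries are hypothetical. It runs against an in-memory SQLite database rather than any of the platforms listed above.

```python
import sqlite3

# Hypothetical schema metadata and role policy, for illustration only.
SCHEMA = {"sales": ["region", "amount", "customer_email"]}
ROLE_COLUMNS = {
    "analyst": {"region", "amount"},
    "admin": {"region", "amount", "customer_email"},
}
ALLOWED_AGGREGATES = {"SUM", "AVG", "COUNT"}


def build_prompt(question: str) -> str:
    """Include the schema in the prompt so the model can ground its plan."""
    tables = "\n".join(f"{t}({', '.join(cols)})" for t, cols in SCHEMA.items())
    return f"Schema:\n{tables}\nQuestion: {question}\nReturn a query plan."


def stub_model(prompt: str) -> dict:
    """Stand-in for an LLM call; returns a fixed structured plan."""
    return {"table": "sales", "group_by": "region", "aggregate": ("SUM", "amount")}


def render_sql(plan: dict, role: str) -> str:
    """Validate the plan against the schema and the role policy, then render SQL."""
    func, column = plan["aggregate"]
    used = {plan["group_by"], column}
    if plan["table"] not in SCHEMA or not used <= set(SCHEMA[plan["table"]]):
        raise ValueError("plan references an unknown table or column")
    if func not in ALLOWED_AGGREGATES:
        raise ValueError(f"unsupported aggregate: {func}")
    denied = used - ROLE_COLUMNS.get(role, set())
    if denied:
        raise PermissionError(f"role {role!r} may not read {sorted(denied)}")
    # Every identifier was checked against an allow-list above,
    # so interpolating them into the statement is safe here.
    group = plan["group_by"]
    return (f"SELECT {group}, {func}({column}) FROM {plan['table']} "
            f"GROUP BY {group} ORDER BY {group}")


def answer(question: str, role: str, conn: sqlite3.Connection) -> list:
    """Question -> plan -> validated SQL -> rows."""
    plan = stub_model(build_prompt(question))
    return conn.execute(render_sql(plan, role)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL, customer_email TEXT)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("EMEA", 100.0, "a@example.com"),
     ("EMEA", 50.0, "b@example.com"),
     ("APAC", 70.0, "c@example.com")],
)
print(answer("What is total revenue by region?", "analyst", conn))
```

Validating a structured plan avoids having to parse free-form SQL produced by the model, at the price of supporting only the query shapes the plan format can express.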

4. AI Agent Orchestration

Manages the lifecycle, interactions, and task delegation among multiple AI agents, ensuring efficient task execution across different AI-powered applications.

- Multi-Agent Coordination Framework – Uses task-based delegation, parallel execution, and dependency resolution to optimize workflows.

- Context Sharing & Memory Management – Maintains contextual information across multiple agent interactions for continuity.

- Event-Driven Architecture – Integrates with enterprise event bus systems (e.g., Kafka, Azure Event Grid) to trigger AI agent actions based on real-time data streams.

- Workflow & Decision Automation – Enables AI agents to execute multi-step workflows based on predefined rules and machine learning-driven decision models.
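
Task delegation with dependency resolution and parallel execution can be sketched with the Python standard library alone. In this illustration each "agent" is just a function that receives the shared context, and the task names and dependency map are hypothetical. Tasks whose prerequisites are complete run together as a batch. A real orchestrator could start each task as soon as its own prerequisites finish instead of waiting for the whole batch.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter


def run_workflow(tasks: dict, dependencies: dict):
    """Run tasks in dependency order, executing independent tasks in parallel.

    tasks:        name -> callable(context) -> result
    dependencies: name -> set of task names that must finish first
    Returns the shared context (name -> result) and the batches that were run.
    """
    sorter = TopologicalSorter({name: dependencies.get(name, set()) for name in tasks})
    sorter.prepare()  # raises graphlib.CycleError if the dependencies are circular
    context = {}
    batches = []
    with ThreadPoolExecutor() as pool:
        while sorter.is_active():
            ready = list(sorter.get_ready())
            snapshot = dict(context)  # read-only view of earlier results for this batch
            results = list(pool.map(lambda name: tasks[name](snapshot), ready))
            for name, result in zip(ready, results):
                context[name] = result  # shared memory for downstream agents
                sorter.done(name)
            batches.append(sorted(ready))
    return context, batches


# Hypothetical agents for a small stock-reconciliation workflow.
TASKS = {
    "fetch_orders": lambda ctx: [3, 5],
    "fetch_inventory": lambda ctx: 10,
    "reconcile": lambda ctx: ctx["fetch_inventory"] - sum(ctx["fetch_orders"]),
    "report": lambda ctx: f"remaining stock: {ctx['reconcile']}",
}
DEPENDENCIES = {
    "reconcile": {"fetch_orders", "fetch_inventory"},
    "report": {"reconcile"},
}

context, batches = run_workflow(TASKS, DEPENDENCIES)
print(batches)
print(context["report"])
```

The same loop could be driven by events instead of a static task map, for example by adding tasks when a message arrives on an event bus, but that is outside the scope of this sketch.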

5. Interfaces to Large Language Models (LLMs)

Standardizes API-based communication between enterprise applications and AI models, providing a flexible and scalable interface for LLM integration.

- Multi-Model Compatibility – Supports OpenAI GPT, DeepSeek, Claude, Mistral, and other LLMs via unified API endpoints.

- Streaming & Batch Inference – Enables real-time and bulk processing of LLM queries for optimized latency and throughput.

- Fine-Tuning & Customization – Allows integration of domain-specific fine-tuned models for enhanced accuracy in legal, financial, and technical use cases.

- LLM Cost & Latency Optimization – Implements intelligent model selection (e.g., using smaller models for simpler queries) to reduce costs while maintaining response quality.
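
A unified interface with cost-aware model selection might look like the following sketch. The model names, the cost figures, the keyword heuristic in `estimate_complexity`, and the `call` functions are all placeholders invented for the example. In practice each `call` would wrap a provider SDK, and complexity would more likely be estimated by a classifier or from past response quality.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ModelSpec:
    name: str
    cost_per_1k_tokens: float    # illustrative numbers, not real prices
    max_complexity: int          # highest complexity score this model should handle
    call: Callable[[str], str]   # uniform signature hides provider differences


def estimate_complexity(prompt: str) -> int:
    """Crude heuristic: longer prompts and analytical verbs score higher."""
    score = len(prompt.split()) // 50
    keywords = ("analyze", "compare", "step by step", "prove")
    if any(k in prompt.lower() for k in keywords):
        score += 2
    return score


def select_model(prompt: str, models: list) -> ModelSpec:
    """Pick the cheapest model rated for the prompt's complexity.
    If none is rated high enough, fall back to the most capable one."""
    needed = estimate_complexity(prompt)
    capable = [m for m in models if m.max_complexity >= needed]
    if not capable:
        return max(models, key=lambda m: m.max_complexity)
    return min(capable, key=lambda m: m.cost_per_1k_tokens)


def complete(prompt: str, models: list) -> tuple:
    """Single entry point for applications: route, call, and report which model answered."""
    model = select_model(prompt, models)
    return model.name, model.call(prompt)


# Hypothetical registry with stubbed model calls.
MODELS = [
    ModelSpec("small-model", 0.1, 1, lambda p: "stub answer from small-model"),
    ModelSpec("large-model", 1.0, 5, lambda p: "stub answer from large-model"),
]

print(complete("What is our refund window?", MODELS))
print(complete("Compare Q1 and Q2 revenue and analyze the drivers", MODELS))
```

Because applications only depend on `complete`, models can be added, repriced, or replaced in the registry without changing calling code.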